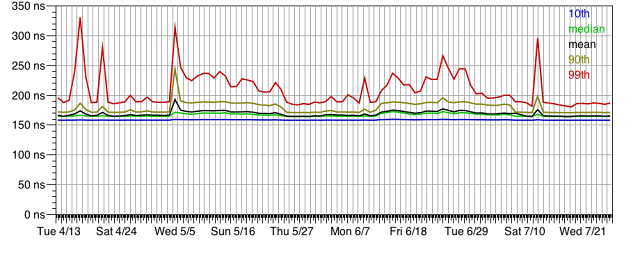

As part of their investigation into the

RDS latency anomaly, Amazon asked me for a log of RDS request latencies. Despite the fact that the entire purpose of the benchmark program is to measure, record, and analyze request latencies, there was no way to obtain an explicit log. I had to modify the program and push a new build.

This spurred me to think more systematically about my approach to data gathering. When developing and maintaining any sort of online service, it's important to be able to analyze the past and present behavior of your servers. The primary tool for this purpose is usually some sort of server log (e.g. stdout or syslog), though many other tools are used as well. In this post, I'll use the generic term "instrumentation" to cover all mechanisms that enable inspection of the past behavior of a server system.

The questions one might have about a system's behavior are virtually unbounded, and a plethora of instrumentation mechanisms have been invented to answer them. Too many, really: real-world systems often use a hodgepodge of instrumentation mechanisms. This is difficult to learn and use, and yields only patchwork functionality. In this post, I look for a more organized approach.

How do we use instrumentation?

What sorts of questions might one want to answer using server instrumentation? It's a broad space. Here are some representative examples:

Why was that slow? You've loaded a page from your own service, and you notice that it took several seconds to appear. It's supposed to be faster. What went wrong? Where was the time spent? Note that you might not know, a priori, specifically which server handled your request. Also note that the request might have invoked nested requests (e.g. to a database server); if the nested requests were slow, you might have to look into the database server to understand the problem.

Why didn't that work? A user reports that they got an error message while using your site. They don't remember the details, but they can tell you their account name, and roughly when the error occurred. What happened exactly? You'd like to dig up the request that triggered the error, error details (e.g. stack crawl, relevant parameters and server state), and the user's interaction history leading up to the error. Again, you might not know which specific server was involved, and the error might have bubbled up from a deeper server tier.

Are my servers healthy right now? What is the average request latency? Are we serving [m]any errors? For a variety of server metrics, are the current values in line with past behavior?

I just tweaked something, what was the impact? How did server metrics change when the tweak was made? Any speedup or slowdown? Are any new errors occurring?

What is this user's history on the site? Perhaps you've noticed what looks like suspicious behavior from a particular user -- you suspect they may be a spammer. How have they been using your service?

What is this server up to? Suppose CPU usage spikes on one of your servers. What is that server up to -- what operations is it performing, is there any unusual activity?

How many unique daily users does my site have? This leads into more complex "analytics" questions -- breakdown of visitors by geographic region, referral source, trajectory through the site, etc.

Which records were modified by module X during a certain time period? You might need to know this to clean up after a bug.

On what occasions has a certain operation been performed, and how long did it take on each occasion -- the question Amazon asked me about RDS requests.

Why instrumentation is needed

Logging, event counters, and other "historical record" instrumentation mechanisms are not the only tools for understanding the behavior of a software system. There are also debuggers, black-box monitoring, scripted tests, and many other approaches. However, proactively recording the internal behavior of live production servers can often be invaluable:

- It allows you to investigate problems that you can't reproduce. ("This user says X happened to her yesterday morning.")

- Corollary: for problems that you can reproduce, it can save you the often-considerable hassle of reproducing them.

- It allows you to investigate problems that only arise in the live production environment. ("Performance degrades every day during the morning traffic peak. Our artificial load generator doesn't reproduce the pattern.")

- It allows you to detect patterns over time. ("The site seems slower lately... has something changed?")

Instrument everything

Given that you are going to instrument your program, what should you instrument? Which events are worth logging? Which variables should be recorded? It's hard to know in advance. If you instrument too much, you will have a flood of data that may be hard to interpret, and you may even overload some server resource such as disk bandwidth. If you instrument too little, you may not get the information you need.

We have at least two tools available to avoid this dilemma. One is configurable instrumentation -- the ability to dynamically disable some instrumentation points at runtime, perhaps via a configuration file. (Configurable "logging levels" -- e.g. discard all log messages at FINE and below -- are a simple example of this.) If you liberally instrument your program, configurable instrumentation allows you to throttle the output down to a manageable level, while retaining the ability to enable detailed output on a moment's notice.

The second tool is temporal winnowing -- retain detailed data for a short period, then thin it out. You might retain FINER logging in RAM for 100 seconds, FINE on local disk for a day, and INFO in a central repository for a month. This provides detailed information for the recent past (the period you're most often interested in), while minimizing overall storage costs.

It's also worth recalling that disks have gotten very cheap. Suppose we allow ourselves 1MB/sec of (moderately) compressed log output. That allows for a

lot of logging per request, even on a server handling hundreds or thousands of requests per second. And yet retaining that output for a week requires only 600GB of disk space -- a fraction of a single modern hard disk. So: "when in doubt, write it out".

A unified data model

To answer the sorts of questions mentioned above, we need several kinds of information:

- Traditional logging -- a textual record of events of interest.

- Rate counters -- how often do various events occur?

- Latency measurements -- how long does each operation of interest take to complete?

- Latency breakdowns -- how does the time spent in an operation break down into sub-operations?

- Time-varying values -- what is the history of, say, heap size or CPU utilization?

Here is an attempt to unify these under a single mechanism. I use the traditional logging model as my starting point; it's simple, easy to understand, and has stood the test of time. Imagine that we are writing a servlet to display some sort of dynamic content. We can sprinkle in a few log calls (using a hypothetical "Log" class whose precise API I leave unspecified):

Log.info("rendering item % on behalf of user %", itemId, userId);

if (...) {

Log.fine("case 1; % subrecords", count);

...

} else {

Log.fine("case 2; % subrecords", count);

...

}

Log.fine("finished rendering item");

Nothing novel here. Clearly this provides our first category of information -- a textual log of events of interest. With a little pattern matching, we can get rate counters as well. For instance, to determine the number of item pages that have been displayed, we can count the number of log messages that begin with "rendering item". (If this is too fragile for your taste, you could adopt the convention of beginning each log message with a stable identifier --

Log.info("pages/render/item % on behalf of user %", itemId, userId)).

For latency measurements, we need to identify the start and end of an operation of interest. This can be done by indicating which Log calls mark an operation start or end. Here's the same code fragment again, with two slight tweaks (highlighted in bold):

Log.startInfo("rendering item % on behalf of user %", itemId, userId);

...

if (...) {

Log.fine("case 1; % subrecords", count);

...

} else {

Log.fine("case 2; % subrecords", count);

}

The log calls at the start and end of the render operation have been explicitly identified. (This naming convention -- "startInfo" and so forth -- is clumsy, especially when the start and end are logged at different severity levels. For an actual implementation, I would try to come up with something better. I've also glossed over many other details, such as what happens if an exception is thrown between the start and end calls.) And the message text has been omitted for the "end" call, as the Log class now has enough information to generate an appropriate message automatically. The output from this code might look something like the following:

05:01:37.109 rendering item 8461 on behalf of user 196584

05:01:37.110 | case 1; 9 subrecords

05:01:37.254 | [completed in 0.145 seconds]

If Log.startXXX / Log.endXXX calls are nested, we can easily extract the fourth data category, hierarchical latency breakdowns. (That leads to the interesting question of how to track sub-operations that execute on a different thread, or even in a different server; Google's

Dapper project shows an approach.)

Finally, we have time-varying values. These can be recorded by periodically emitting them into the log:

Log.info("free memory currently %", Runtime.freeMemory());

The history can then be obtained using a simple pattern match. Again, for more reliably pattern matching, we could use a stable identifier instead of the prose string "free memory currently".

This unified "everything in the log" approach provides many benefits. For instance:

- A single Log call enables multiple forms of analysis -- you get traditional logging, rate counting, and latency graphing with a single hook.

- There's only one instrumentation system to learn and deploy.

- "Joins" become possible (e.g. graph the latency of operation X, considering only invocations made during requests from user Y).

- The log becomes comprehensive; you can see all available data in one place, rather than jumping around between different instrumentation systems.

Infrastructure requirements

To answer questions about system behavior using nothing more than log output would require significant infrastructure. Logs would need to be stored, rotated, cataloged, summarized or indexed, and analyzed in fairly sophisticated ways. In effect, I'm proposing a data warehousing approach to log management (despite knowing nothing about the field of data warehousing). I'll dive into this in a later post.

Though the infrastructure required to support this approach is significant, it is important not to let the infrastructure drive the overall design. The infrastructure only has to be written once, but it will be used every day. This "use" falls into two categories:

1. Instrumenting a program -- adding Log calls while writing code.

2. Analyzing a system -- pulling up logs and graphs to understand what's going on.

The primary goal should be to make these two activities as cheap and easy as possible; let the infrastructure bend itself toward that goal.

Existing work?

There must be literature about logging and system forensics, but I'm not especially familiar with it. If you're reading this and you can point me to any interesting papers, I'd love to see them. (You can comment on this post, or e-mail me at steve at snewman.net.) I'm especially interested in unified approaches to system instrumentation (encompassing logging, performance measurement, etc.), and real-world solutions for multi-server systems.